Project Duration: 2022.10 - 2023.06

In this project, we implemented an exteroceptive sensor data acquisition framework for the Unitree AlienGo quadruped robot. The framework reads data from the robot’s built-in Intel RealSense D435 depth camera and T265 tracking camera (used for visual odometry), and from an external Velodyne VLP-16 lidar. The sensor data can be packaged and transferred between devices as ROS topics. On top of this framework, we implemented three downstream tasks: SLAM and autonomous navigation, elevation map-based navigation, and an experimental footstep planning project, all for the quadruped robot.

In this section, we give a brief overview of the downstream tasks shown on this page, using demonstration videos and diagrams.

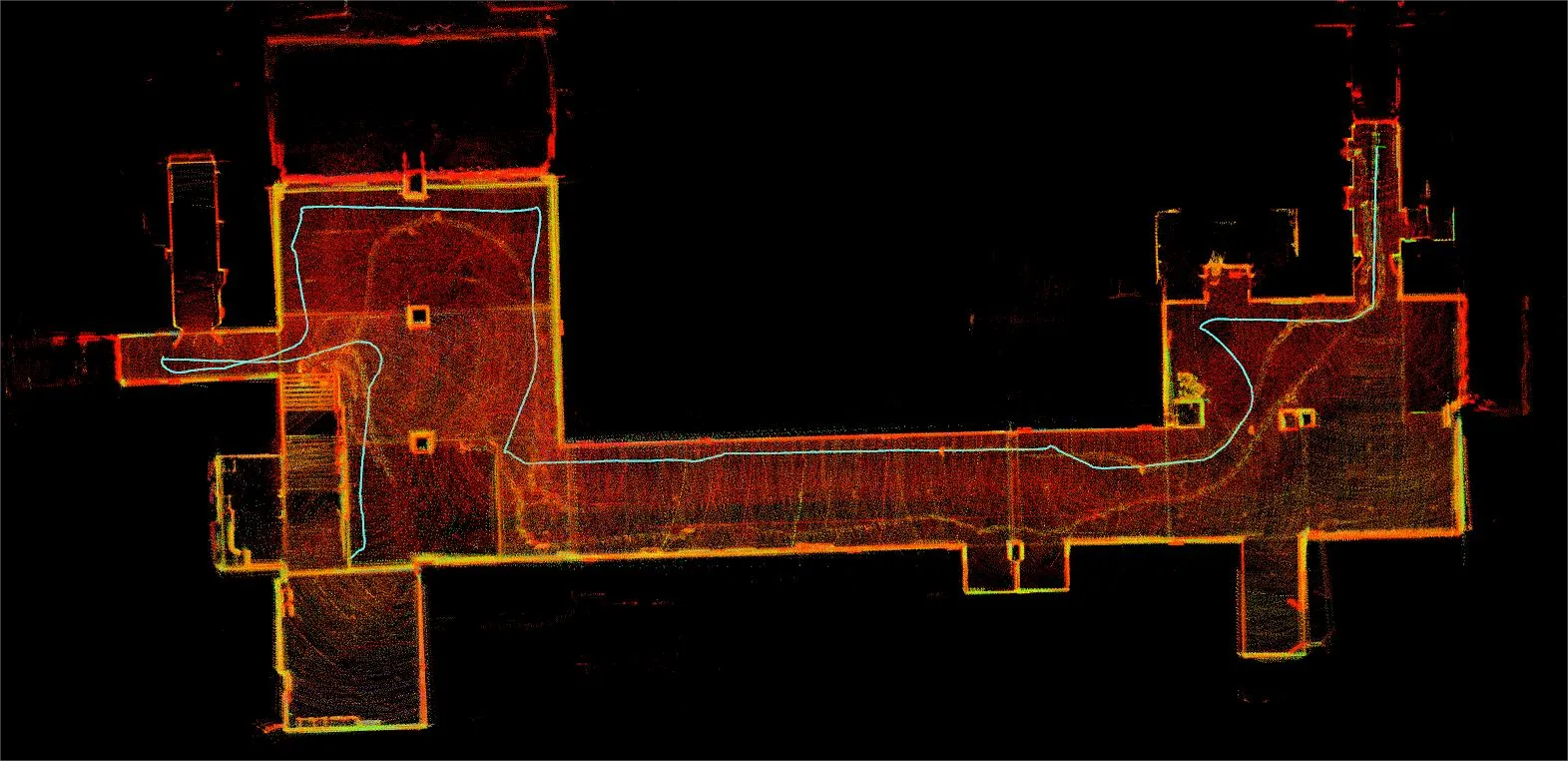

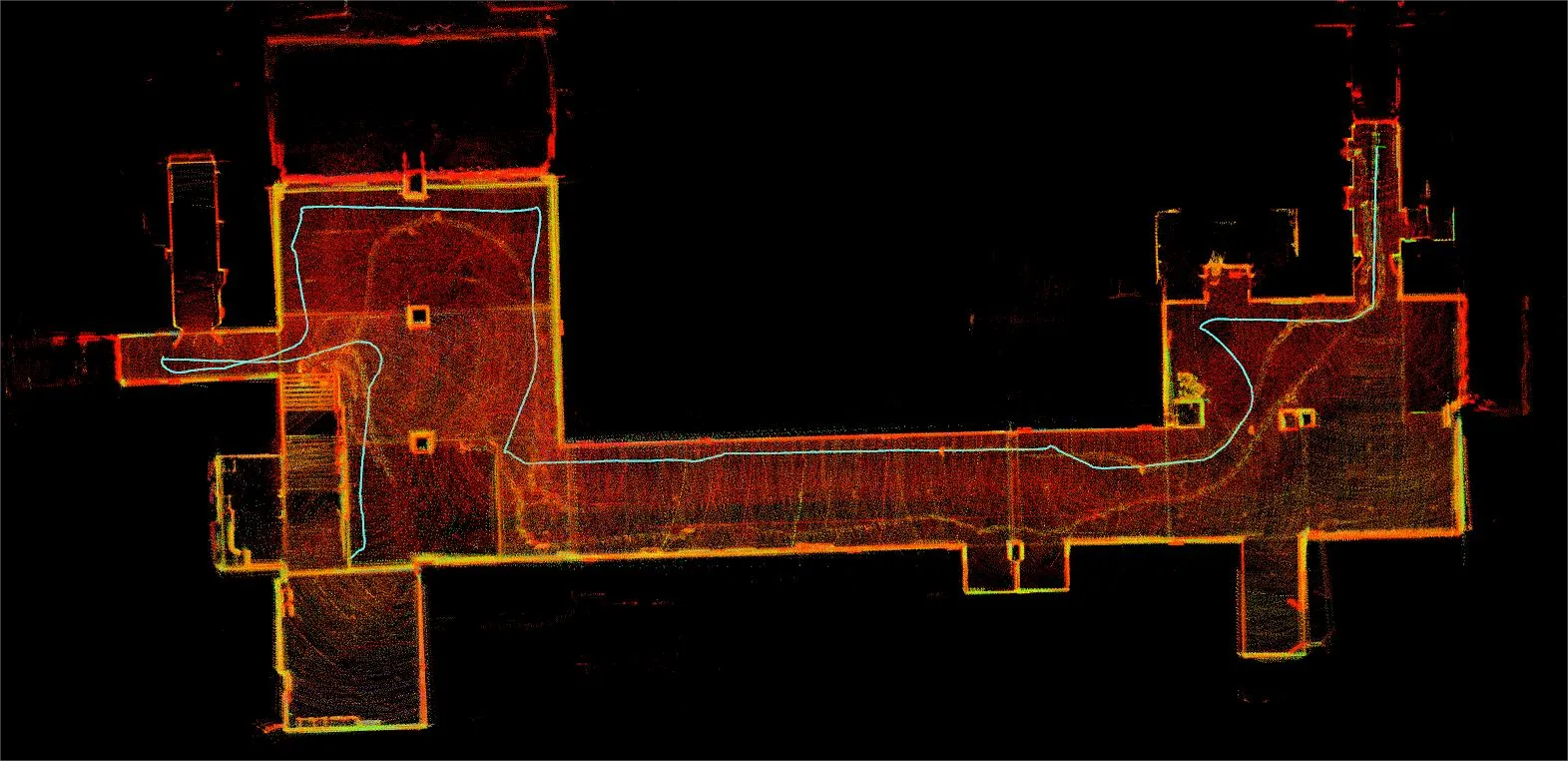

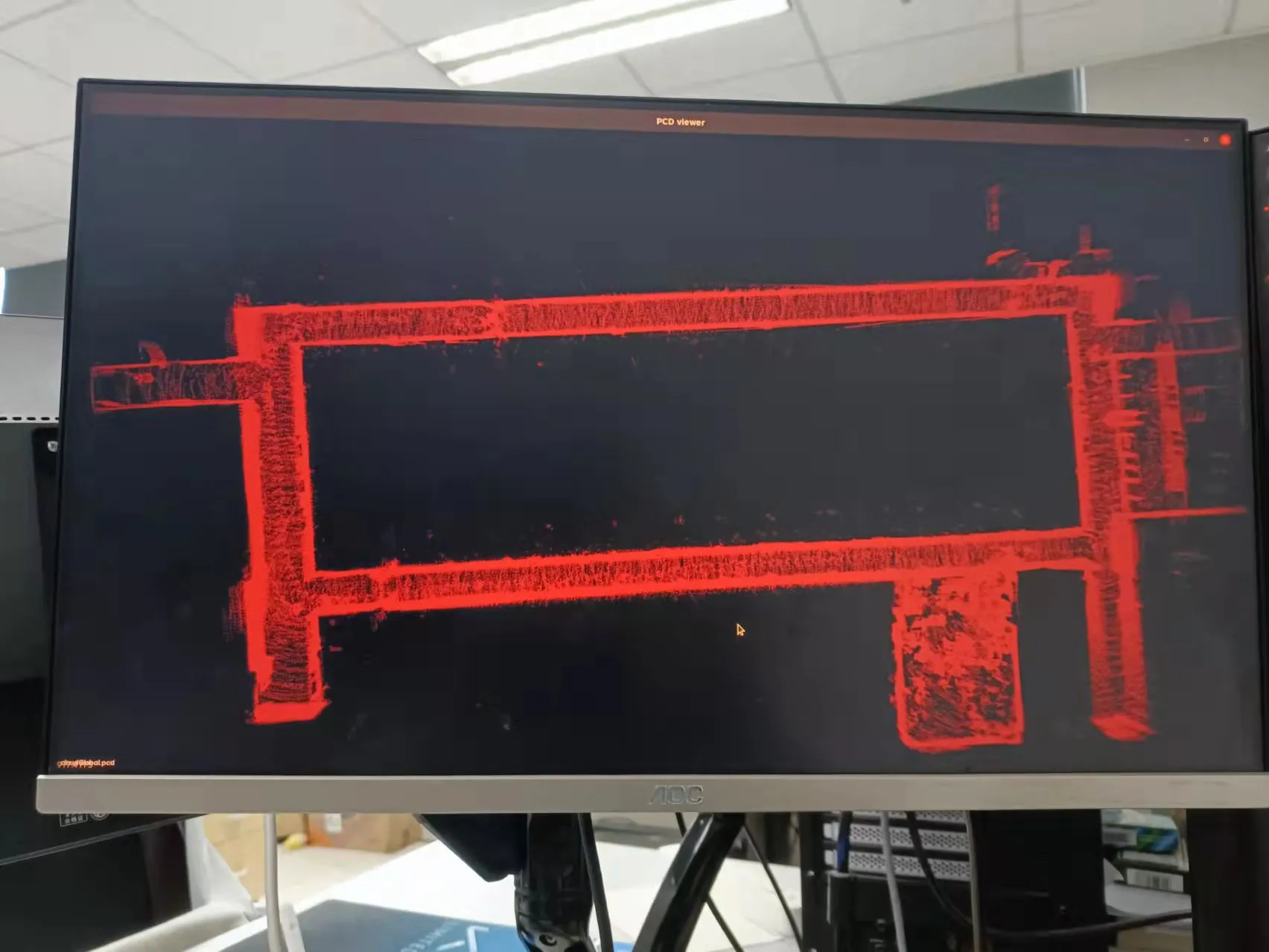

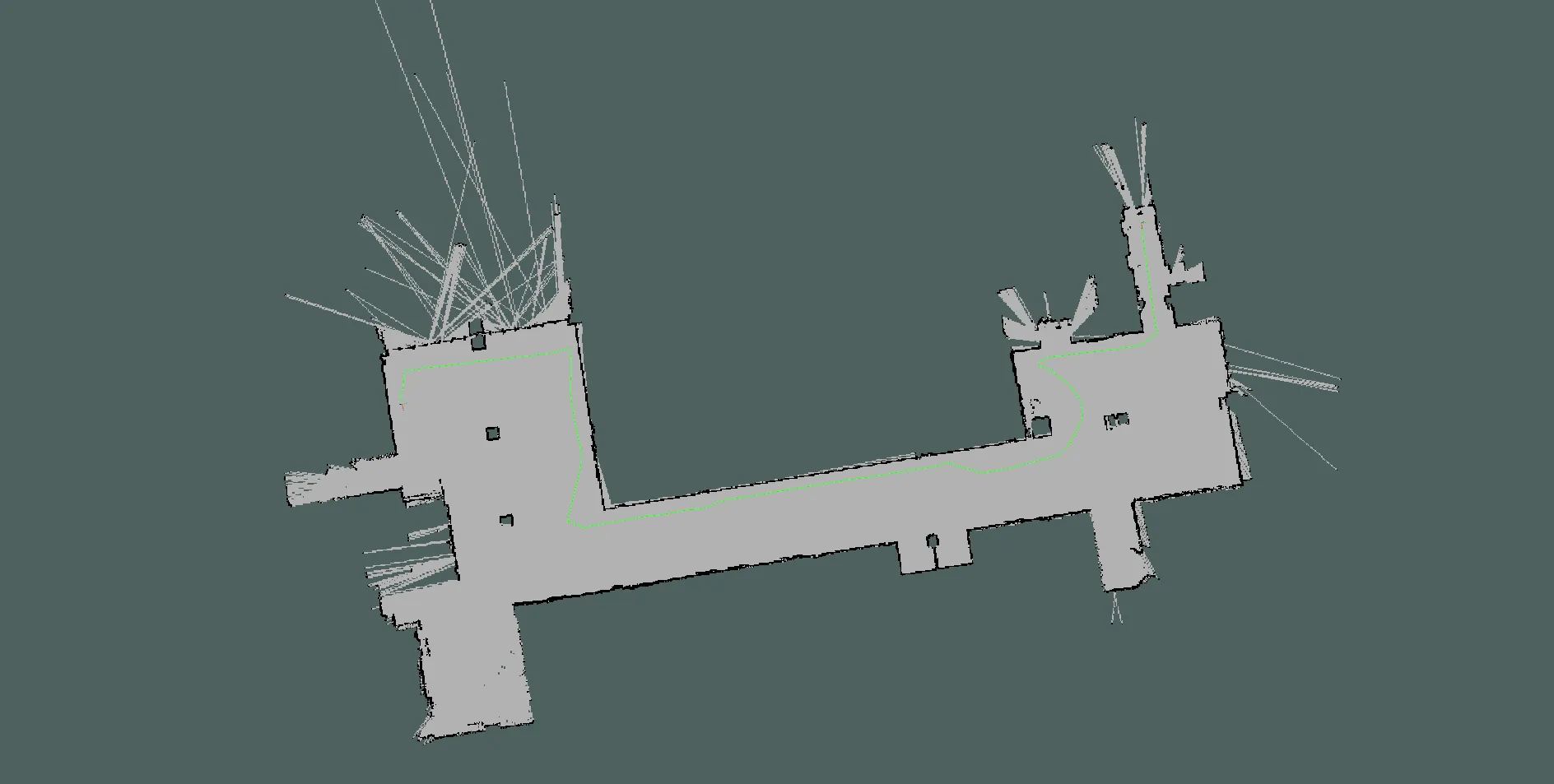

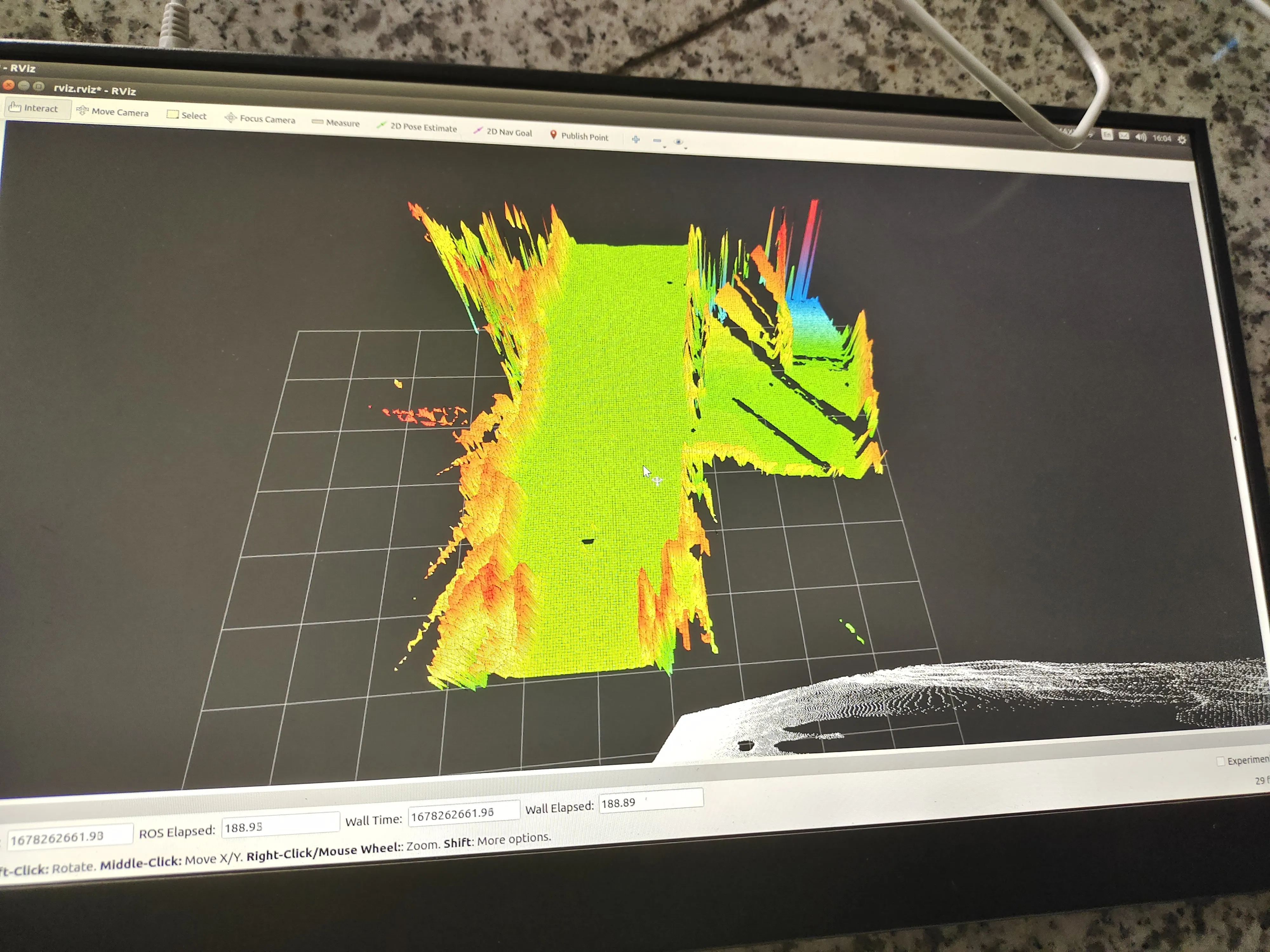

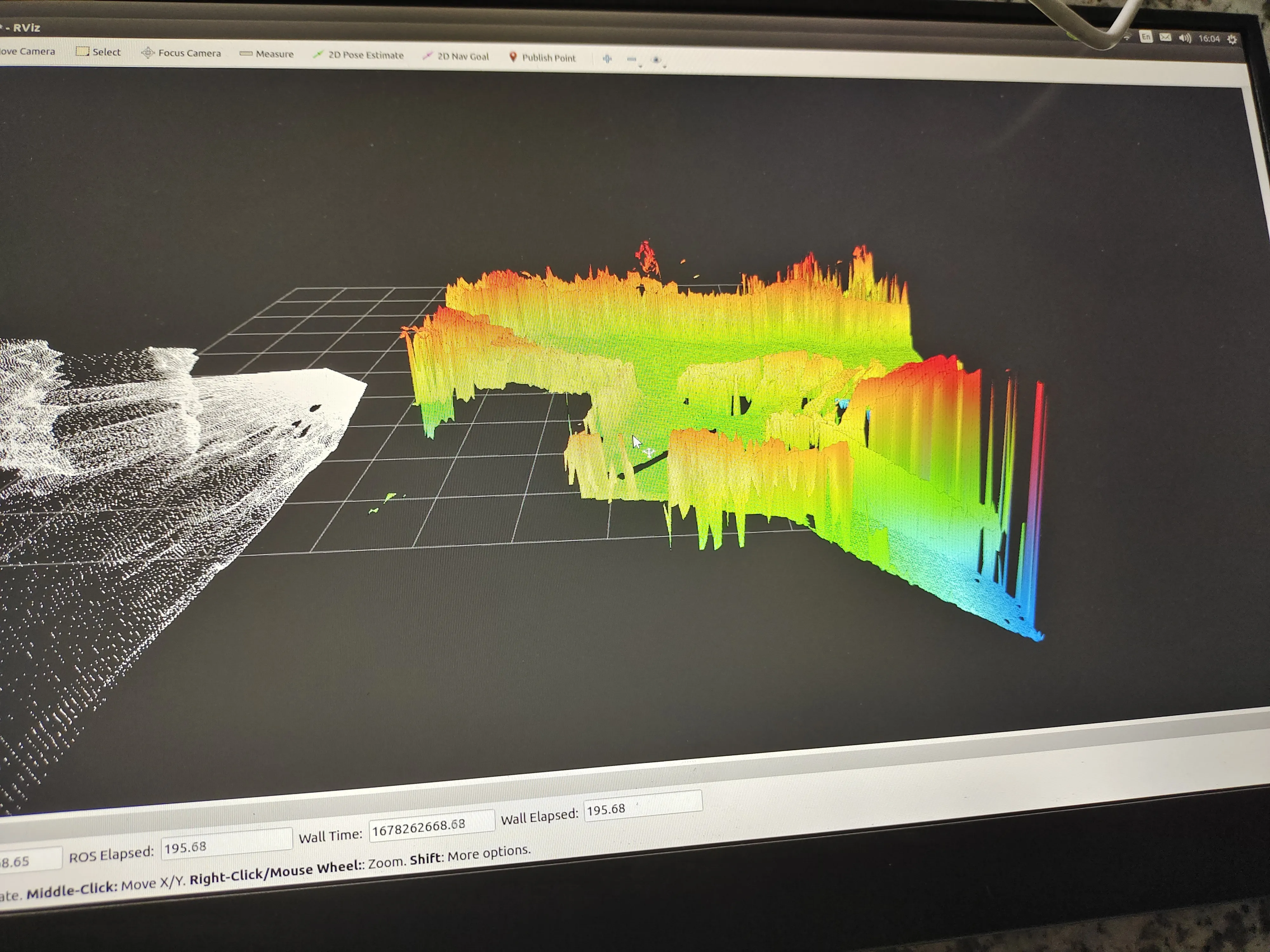

For the SLAM task, we use Gmapping and LIO-SAM to build 2D planar maps and 3D point cloud maps, respectively, of the quadruped robot’s surroundings.

For the autonomous navigation task, we implemented a navigation system for the quadruped robot based on the ROS Navigation Stack.

In the elevation map-based navigation task, we used elevation_mapping_cupy to build the elevation map. On top of it, we implemented an autonomous navigation system that contains both global and local path planning. This system lets the quadruped robot either bypass obstacles or cross them directly, depending on its own locomotion ability.
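The idea of bypassing or crossing an obstacle depending on locomotion ability can be illustrated with a small grid planner. The sketch below is not the project's planner: it is a plain Dijkstra search over a 2D list of heights, and the names `plan_path`, `max_step`, and `climb_weight` are hypothetical parameters chosen for illustration. A move between neighbouring cells is allowed only if the height difference stays within `max_step`, so low obstacles are crossed at an extra cost while tall ones are routed around.

```python
import heapq
from math import inf


def plan_path(elevation, start, goal, max_step=0.15, climb_weight=5.0):
    """Dijkstra search over a 2D elevation grid (heights in metres).

    A move between 4-connected cells is allowed only if the height
    difference is at most max_step (a stand-in for the robot's
    locomotion ability). Allowed moves cost 1 plus a penalty
    proportional to the height difference.
    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(elevation), len(elevation[0])
    best = {start: 0.0}   # lowest known cost to reach each cell
    parent = {}           # back-pointers used to rebuild the path
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if cost > best.get(cell, inf):
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            dh = abs(elevation[nr][nc] - elevation[r][c])
            if dh > max_step:
                continue  # step too high for the robot: treat as blocked
            new_cost = cost + 1.0 + climb_weight * dh
            if new_cost < best.get((nr, nc), inf):
                best[(nr, nc)] = new_cost
                parent[(nr, nc)] = cell
                heapq.heappush(queue, (new_cost, (nr, nc)))
    return None


# A 0.10 m curb in the top row and a 0.50 m box below it.
grid = [
    [0.0, 0.10, 0.0],
    [0.0, 0.50, 0.0],
    [0.0, 0.50, 0.0],
]
print(plan_path(grid, (1, 0), (1, 2)))                 # crosses the curb
print(plan_path(grid, (1, 0), (1, 2), max_step=0.05))  # no feasible route
```

With the default `max_step`, the path climbs over the low curb and avoids the tall box. Lowering `max_step` below the curb height leaves no feasible route, which is how a less capable robot would be modelled in this toy setting.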

The footstep planning task builds on quad-sdk and focuses on implementing footstep planning for the Unitree AlienGo quadruped robot using the surrounding elevation information. Note that some of the following demos also use the global path planner gbpl.
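As a rough illustration of how elevation information can inform foot placement, the sketch below picks a landing cell near a nominal foothold by preferring locally flat terrain. This is not quad-sdk's algorithm; the function `select_foothold` and its parameters `radius` and `distance_weight` are hypothetical and chosen only to make the idea concrete.

```python
def select_foothold(elevation, nominal, radius=1, distance_weight=0.01):
    """Pick a foothold cell near `nominal` on a 2D elevation grid.

    Each candidate within `radius` cells is scored by its local roughness
    (largest height difference to its 8-neighbourhood) plus a small
    penalty for moving away from the nominal cell. The lowest score wins.
    """
    rows, cols = len(elevation), len(elevation[0])

    def roughness(r, c):
        h = elevation[r][c]
        return max(
            abs(elevation[nr][nc] - h)
            for nr in range(max(0, r - 1), min(rows, r + 2))
            for nc in range(max(0, c - 1), min(cols, c + 2))
        )

    r0, c0 = nominal
    best_cell, best_score = None, float("inf")
    for r in range(max(0, r0 - radius), min(rows, r0 + radius + 1)):
        for c in range(max(0, c0 - radius), min(cols, c0 + radius + 1)):
            distance = abs(r - r0) + abs(c - c0)  # Manhattan distance in cells
            score = roughness(r, c) + distance_weight * distance
            if score < best_score:
                best_cell, best_score = (r, c), score
    return best_cell


# Flat ground with a 0.3 m ledge along the right edge.
terrain = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.3],
    [0.0, 0.0, 0.0, 0.3],
    [0.0, 0.0, 0.0, 0.3],
]
print(select_foothold(terrain, nominal=(1, 2)))  # shifts away from the ledge
```

In this toy terrain the nominal cell sits next to the ledge, so the selection moves one cell toward the flat area. On fully flat ground the nominal cell itself is returned, because every alternative only adds the distance penalty.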